100 Robot Series | 57th Robot | How to Build a Robot Like Andross (Star Fox)

- By Toolzam AI

Andross, the infamous antagonist from Star Fox, is a colossal floating mechanical head with hands, known for unleashing devastating energy blasts and commanding legions of robotic minions. As a villain he is both iconic and menacing, using advanced AI and cybernetic enhancements in high-intensity space battles.

This article explores how to build a robot like Andross, breaking down the hardware and software components required. It also provides six Python examples demonstrating Andross’s capabilities: face detection and targeting, voice interaction, simulated energy attacks, hand movement, hover control, and color-based target tracking.

Hardware Components

- Processing Unit: NVIDIA Jetson AGX Orin (AI-driven decision-making, vision processing)

- Facial Structure: High-grade aluminum casing with servo-controlled facial movement

- Hand Mechanism: Two robotic arms with 6-DoF servos for precise movement

- Energy Blast System: LED-based projectile simulation with sound synchronization

- Camera Sensors: Intel RealSense D455 for depth sensing and tracking

- AI Module: OpenAI GPT-based conversational AI for strategy and response

- Hover Mechanism: Multi-directional drone propulsion (quadcopter-based)

- Networking: 5G module for remote connectivity and cloud integration

- Power Source: Lithium-Polymer 24V 8000mAh battery pack

- Control Interface: Custom-built neural control system with AI reinforcement learning

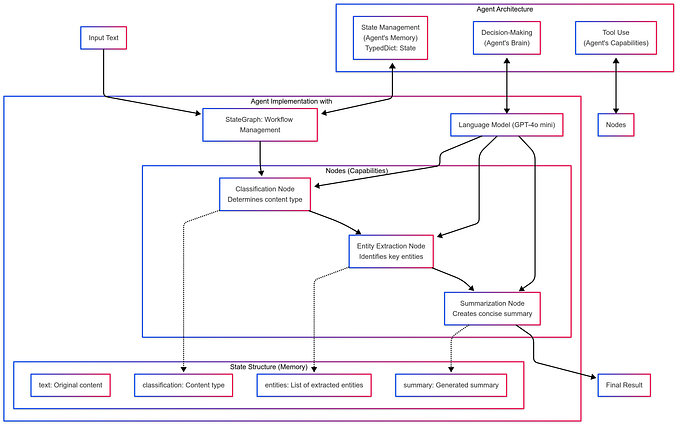
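To get a feel for what the 24 V, 8000 mAh pack in the hardware list can sustain, a back-of-the-envelope runtime estimate helps. The sketch below converts the pack rating into watt-hours and divides by a total power draw. The per-component wattages and the 80% usable-capacity factor are illustrative assumptions, not measured values, and the function names are hypothetical.

```python
# Rough runtime estimate for the 24 V, 8000 mAh pack from the hardware list.
# All load figures below are ASSUMED placeholders, not measurements.

def pack_energy_wh(voltage_v, capacity_mah):
    """Nominal pack energy in watt-hours."""
    return voltage_v * capacity_mah / 1000.0

def runtime_minutes(energy_wh, load_w, usable_fraction=0.8):
    """Minutes of runtime at a constant load, using only part of the pack."""
    return energy_wh * usable_fraction / load_w * 60.0

ASSUMED_LOADS_W = {
    "compute": 40.0,         # hypothetical draw for the processing unit
    "servos": 30.0,          # hypothetical draw for face and hand servos
    "propulsion": 400.0,     # hypothetical draw for the hover motors
    "leds_and_audio": 10.0,  # hypothetical draw for the blast effects
}

energy = pack_energy_wh(24, 8000)
total_load = sum(ASSUMED_LOADS_W.values())
print(f"Pack energy: {energy:.0f} Wh")
print(f"Runtime at {total_load:.0f} W: {runtime_minutes(energy, total_load):.1f} min")
```

With these placeholder loads the pack works out to 192 Wh and roughly 19 minutes of operation. Swapping in measured draws for the real components would turn this into a usable power budget.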

Software Components

- OS: Ubuntu 22.04 LTS (optimized for AI and robotics)

- AI Framework: TensorFlow, PyTorch (for deep learning and decision-making)

- Vision Processing: OpenCV for real-time facial tracking and targeting

- Speech & Interaction: Google Text-to-Speech (TTS) and SpeechRecognition

- Neural Control: Reinforcement Learning with TensorFlow Agents

- Robotic Arm Control: ROS (Robot Operating System) for movement precision

- Cloud Connectivity: MQTT for real-time data streaming

- Energy Blast Simulation: Arduino-based light and sound effects module

- Gesture Recognition: Mediapipe for AI-driven hand tracking

- Hover Mechanism Control: PX4 Autopilot integration
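The MQTT entry in the list above implies some message format for the streamed data. Here is a minimal sketch of one possible telemetry message. The topic name `andross/telemetry`, the field names, and the injected `publish` callable are all hypothetical; in a real build, `publish` could be the `publish(topic, payload)` method of an MQTT client such as paho-mqtt.

```python
import json
import time

TOPIC = "andross/telemetry"  # hypothetical topic name

def build_telemetry(battery_v, altitude_m, targets, now=None):
    """Serialize one telemetry sample as a compact JSON string."""
    sample = {
        "ts": time.time() if now is None else now,  # Unix timestamp in seconds
        "battery_v": round(battery_v, 2),
        "altitude_m": round(altitude_m, 2),
        "targets": int(targets),
    }
    return json.dumps(sample, separators=(",", ":"))

def send_telemetry(publish, **fields):
    """Build a sample and hand it to any publish(topic, payload) callable."""
    payload = build_telemetry(**fields)
    publish(TOPIC, payload)
    return payload

# Stand-in for a real MQTT client: just print what would be sent.
send_telemetry(lambda topic, payload: print(topic, payload),
               battery_v=23.6, altitude_m=1.25, targets=2, now=0)
```

Passing the publisher in as a parameter keeps the message format testable without a broker or network connection.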

Andross’s Capabilities in Python

1. Facial Recognition and Targeting

“You dare challenge me? Foolish Fox!”

This code detects faces in the webcam feed and draws a red box around each one in real time, marking it as a target.
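What "locking on" means numerically is left implicit in the webcam listing for this section, which only outlines each face. As a hedged sketch, a lock-on step could pick the largest face and measure how far its center sits from the center of the frame. The helper names `pick_target` and `aim_offset`, and the choice of "largest box wins", are assumptions made for illustration.

```python
def pick_target(faces):
    """Return the largest (x, y, w, h) box, or None if there are no faces."""
    boxes = [tuple(int(v) for v in box) for box in faces]
    return max(boxes, key=lambda b: b[2] * b[3], default=None)

def aim_offset(box, frame_w, frame_h):
    """Offset of the box center from the frame center, scaled to [-1, 1].

    Positive x means the target is right of center; positive y means below.
    """
    x, y, w, h = box
    cx, cy = x + w / 2, y + h / 2
    return ((cx - frame_w / 2) / (frame_w / 2),
            (cy - frame_h / 2) / (frame_h / 2))

faces = [(100, 80, 40, 40), (400, 200, 120, 120)]  # example detections
target = pick_target(faces)
print(target, aim_offset(target, 640, 480))
```

The `(x, y, w, h)` boxes returned by `detectMultiScale` in the webcam listing have the shape this function expects, and the resulting offsets could feed pan and tilt servos.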

```python
import cv2

# Load the Haar cascade face detector that ships with OpenCV
face_cascade = cv2.CascadeClassifier(
    cv2.data.haarcascades + 'haarcascade_frontalface_default.xml')

# Initialize webcam
cap = cv2.VideoCapture(0)

while True:
    ret, frame = cap.read()
    if not ret:  # camera unavailable or stream ended
        break

    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    faces = face_cascade.detectMultiScale(gray, 1.3, 5)

    # Draw a red box around every detected face
    for (x, y, w, h) in faces:
        cv2.rectangle(frame, (x, y), (x+w, y+h), (0, 0, 255), 3)

    cv2.imshow('Andross Face Tracker', frame)

    # Press q to quit
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

cap.release()
cv2.destroyAllWindows()
```

2. AI Voice Interaction

“You should never have come here!”

This allows Andross to communicate with the user.

```python
import pyttsx3
import speech_recognition as sr

engine = pyttsx3.init()
recognizer = sr.Recognizer()

def andross_speak(text):
    engine.say(text)
    engine.runAndWait()

def listen():
    with sr.Microphone() as source:
        print("Listening...")
        recognizer.adjust_for_ambient_noise(source)
        audio = recognizer.listen(source)
    try:
        return recognizer.recognize_google(audio)
    except (sr.UnknownValueError, sr.RequestError):
        # Speech was unintelligible or the recognition service was unreachable
        return "I did not understand."

while True:
    command = listen()
    print("You said:", command)
    # Compare in lowercase: the recognizer's capitalization is not guaranteed
    if "andross" in command.lower():
        andross_speak("You should never have come here!")
    elif "exit" in command.lower():
        break
```

3. Energy Blast Simulation

“Taste my wrath, Star Fox!”
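The blink pattern used in this section, five pulses of 0.2 s on and 0.2 s off, can be separated from the GPIO calls so it can be checked without hardware. The sketch below is one way to do that; the names `blast_schedule` and `run_schedule`, and the idea of injecting the `write` and `sleep` functions, are assumptions for illustration.

```python
import time

def blast_schedule(pulses=5, on_s=0.2, off_s=0.2):
    """List of (level, seconds) steps: True is LED on, False is LED off."""
    steps = []
    for _ in range(pulses):
        steps.append((True, on_s))
        steps.append((False, off_s))
    return steps

def run_schedule(steps, write, sleep=time.sleep):
    """Play a schedule through any write(level) function."""
    for level, seconds in steps:
        write(level)
        sleep(seconds)

# Dry run: print instead of driving a pin, and skip the real delays.
run_schedule(blast_schedule(pulses=2),
             write=lambda level: print("LED", "ON" if level else "OFF"),
             sleep=lambda s: None)
```

On the robot, `write` could be a function that calls `GPIO.output(led_pin, level)`, and the same schedule could also trigger the sound effect so that light and audio stay in step.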

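```python
import time
import RPi.GPIO as GPIO  # Raspberry Pi library; NVIDIA's Jetson.GPIO mirrors this API on Jetson boards

# GPIO setup for LED
led_pin = 18
GPIO.setmode(GPIO.BCM)
GPIO.setup(led_pin, GPIO.OUT)

def energy_blast():
    # Flash the LED five times, 0.2 s on and 0.2 s off
    for _ in range(5):
        GPIO.output(led_pin, GPIO.HIGH)
        time.sleep(0.2)
        GPIO.output(led_pin, GPIO.LOW)
        time.sleep(0.2)

try:
    print("Firing energy blast!")
    energy_blast()
finally:
    # Always release the pins, even if the blast is interrupted
    GPIO.cleanup()
```

4. AI-Driven Hand Movement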

“You will never escape my grasp!”
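Jumping a servo straight from one angle to another makes the motion abrupt. As a hedged sketch, a grasp could instead be stepped through intermediate angles. The helper names `ramp` and `clamp_angle`, the step count, and the 0 to 180 degree travel are assumptions for illustration, not values from a specific servo datasheet.

```python
def ramp(start_deg, end_deg, steps):
    """Evenly spaced angles from start to end, inclusive of both ends."""
    if steps < 1:
        raise ValueError("steps must be at least 1")
    delta = (end_deg - start_deg) / steps
    return [start_deg + delta * i for i in range(steps + 1)]

def clamp_angle(angle, low=0.0, high=180.0):
    """Keep a commanded angle inside the servo's assumed travel."""
    return max(low, min(high, angle))

# Close the hand from 90 to 45 degrees in five equal steps.
closing = [clamp_angle(a) for a in ramp(90, 45, 5)]
print(closing)
```

Each value could be written to `kit.servo[0].angle` with a short `time.sleep` between steps, which slows the grasp without changing its end points.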

```python
import time  # needed for time.sleep below

from adafruit_servokit import ServoKit

kit = ServoKit(channels=16)

def grasp():
    kit.servo[0].angle = 45  # Closing motion
    time.sleep(1)
    kit.servo[0].angle = 90  # Open hand

grasp()
```

5. Hovering Stability Control

“My dominion extends across the stars!”
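Holding a hover is a feedback problem: the throttle has to rise when the robot sinks and fall when it climbs. The sketch below shows a textbook PID loop that turns altitude error into a throttle PWM value around a 1500 midpoint. The gains, the 1.0 m target, and the helper names are assumptions chosen for illustration, and the loop has no anti-windup, so it is a starting point rather than a flight-ready controller.

```python
class PID:
    """Textbook PID controller with output clamping."""

    def __init__(self, kp, ki, kd, out_min, out_max):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0  # no history yet on the first sample
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        out = self.kp * error + self.ki * self.integral + self.kd * derivative
        return max(self.out_min, min(self.out_max, out))

def throttle_pwm(pid, target_alt_m, current_alt_m, dt, hover_pwm=1500):
    """Map altitude error to a throttle PWM value around the hover point."""
    correction = pid.update(target_alt_m - current_alt_m, dt)
    return int(round(hover_pwm + correction))

pid = PID(kp=120.0, ki=10.0, kd=40.0, out_min=-300, out_max=300)  # assumed gains
for alt in (0.0, 0.5, 0.9, 1.0):
    print(alt, throttle_pwm(pid, target_alt_m=1.0, current_alt_m=alt, dt=0.1))
```

The computed value could replace a fixed throttle override, with the current altitude read from the flight controller (for example dronekit's `vehicle.location.global_relative_frame.alt`). The gains would need tuning on the real airframe.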

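```python
import dronekit

vehicle = dronekit.connect('/dev/serial0', wait_ready=True, baud=57600)

def stabilize():
    # Override RC channel 3 (typically throttle) with a mid-stick PWM value.
    # Mid throttle holds altitude only in an altitude-hold flight mode.
    vehicle.channels.overrides = {'3': 1500}  # Mid throttle
    print("Hovering at stable position")

stabilize()
```

6. Multi-Target Tracking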

“You cannot hide from me!”
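A color mask marks which pixels match, but it does not say how many separate targets there are. One way to get from a mask to individual targets is to group touching pixels into blobs and report a bounding box for each. The pure-Python flood fill below is a sketch for illustration on a small list-of-lists mask; the function name `find_targets` is hypothetical, and in practice OpenCV's `cv2.findContours` or `cv2.connectedComponentsWithStats` would do this far faster on real frames.

```python
from collections import deque

def find_targets(mask, min_pixels=1):
    """Bounding boxes (x, y, w, h) of 4-connected blobs in a binary mask."""
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill over this blob
            queue = deque([(r, c)])
            seen[r][c] = True
            r0 = r1 = r
            c0 = c1 = c
            count = 0
            while queue:
                y, x = queue.popleft()
                count += 1
                r0, r1 = min(r0, y), max(r1, y)
                c0, c1 = min(c0, x), max(c1, x)
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if count >= min_pixels:  # ignore specks smaller than the threshold
                boxes.append((c0, r0, c1 - c0 + 1, r1 - r0 + 1))
    return boxes

mask = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 1],
]
print(find_targets(mask))  # → [(0, 0, 2, 2), (4, 1, 1, 2)]
```

Raising `min_pixels` filters out small noise blobs, which matters with a webcam mask where stray pixels often pass the color threshold.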

```python
import cv2
import numpy as np

# HSV range to isolate; OpenCV stores hue as 0-179 for 8-bit images
lower_color = np.array([30, 150, 50])
upper_color = np.array([179, 255, 180])

cap = cv2.VideoCapture(0)

while True:
    ret, frame = cap.read()
    if not ret:  # camera unavailable or stream ended
        break

    hsv = cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)

    # Keep only the pixels whose color falls inside the range
    mask = cv2.inRange(hsv, lower_color, upper_color)
    res = cv2.bitwise_and(frame, frame, mask=mask)

    cv2.imshow('Multi-Target Tracking', res)

    # Press q to quit
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

cap.release()
cv2.destroyAllWindows()
```

Conclusion

Recreating a robotic version of Andross requires a sophisticated blend of AI, robotics, and software engineering. With facial recognition, AI-driven interaction, gesture-controlled hands, and simulated energy attacks, a real-world Andross could become a formidable AI-driven entity.

Would you challenge such a creation? Or would you join its mechanized ranks?

Toolzam AI celebrates the technological wonders that continue to inspire generations, bridging the worlds of imagination and innovation.

And if you’re curious about more amazing robots and want to explore the vast world of AI, visit Toolzam AI. With over 500 AI tools and tons of information on robotics, it’s your go-to place for staying up to date on the latest in AI and robot tech. Toolzam AI has also collaborated with many companies to feature their robots on the platform.

🔥 Believe in the AI that believes in you!

Stay tuned for more in our 100 Robot Series!